The Trump administration has dramatically expanded the use of artificial intelligence (AI) in immigration enforcement. With new platforms like ImmigrationOS and contracts with Palantir, AI now drives everything from data analysis to raids, arrests, and deportations. Although these systems are touted as cost-saving and efficient, critics warn that they are opaque and carry risks of bias, error, overreach, and unaccountability.

Introduction

Artificial intelligence (AI) is no longer limited to consumer apps, self-driving cars, or financial trading. It is now at the center of U.S. immigration enforcement policy, reshaping how DHS and ICE track, arrest, detain, and deport migrants.

The move is framed as a way to increase efficiency, reduce costs, and handle massive backlogs of cases. But beneath the rhetoric lies a troubling reality: AI is making high-stakes enforcement decisions that affect human lives, often with little transparency or oversight.

AI-driven immigration enforcement affects citizens and noncitizens alike, raising concerns among civil society organizations about the broad reach and consequences of these technologies.

This article provides a comprehensive look at:

- What ImmigrationOS is and how it works

- Why Trump’s administration has made AI a priority in deportation strategy

- What data sources feed into AI-driven immigration enforcement

- The risks: bias, errors, overreach, and erosion of due process

- Comparisons with Europe’s AI Act

- The bigger picture: AI, civil liberties, and immigrant communities

What Is ImmigrationOS?

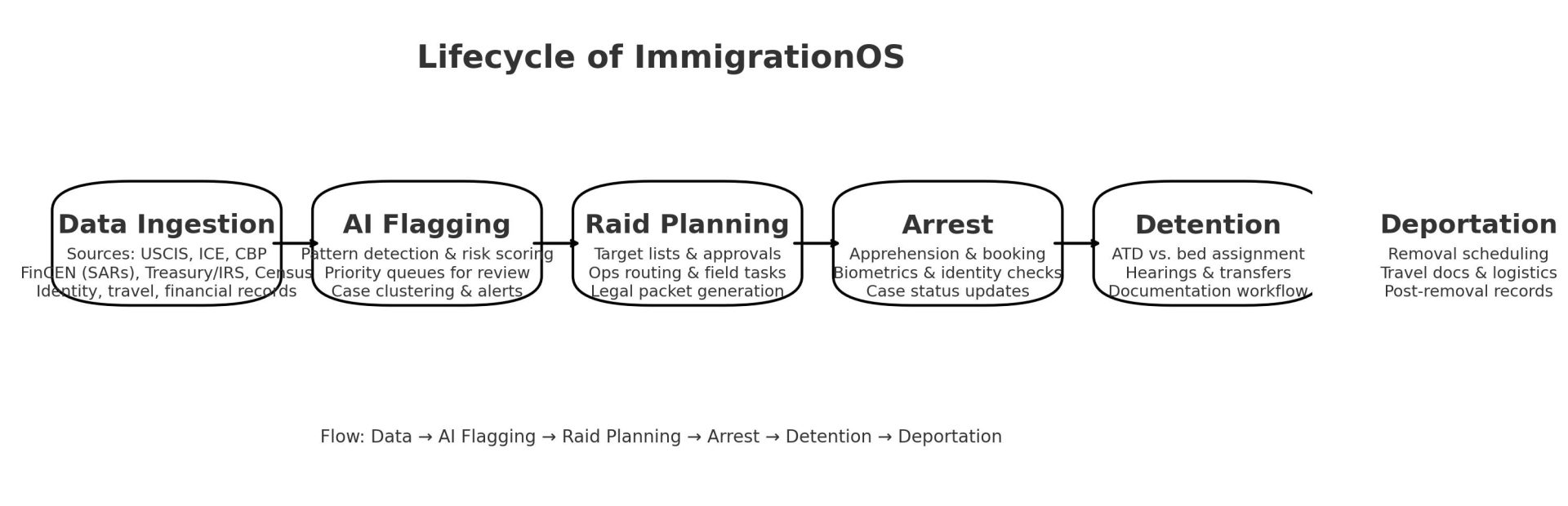

At the heart of the Trump administration’s immigration-AI expansion is ImmigrationOS, a centralized AI-powered platform described by DHS as a “command center for immigration control.” It is the clearest example of how a federal agency is putting AI technologies to work in immigration enforcement.

Core Capabilities Include:

- Data Fusion: Merges relevant data from immigration files, USCIS systems, ICE databases, suspicious activity reports (SARs), Bank Secrecy Act (BSA) flagged transactions, and even IRS and U.S. Census Bureau data.

- Raids & Arrests: Agents can plan and execute operations, log arrests, and automatically generate legal paperwork.

- Lifecycle Routing: The platform routes individuals through detention and removal pipelines.

- Decision Guidance: AI flags “suspicious” individuals, prioritizes enforcement targets, and recommends actions.

A DHS official summarized:

“You can log into the system, carry out a raid, get approval, and route individuals to detention or removal. The entire lifecycle is built into the software.”

This is a leap from older, fragmented tools — a one-stop deportation operating system.

Why Trump Is Accelerating AI in Immigration

The Trump administration has been explicit: deportations must rise, costs must fall, and enforcement must move faster. These actions are part of a broader immigration enforcement policy and reflect the administration’s overall immigration policy objectives.

Claimed Benefits of Immigration AI

- Faster Processing: Algorithms can scan thousands of files in seconds.

- Cost Savings: Reduces need for manual review and extra staffing.

- Better Coordination: ICE, CBP, USCIS, Treasury, and IRS share data seamlessly.

- Enforcement Force Multiplier: Allows ICE to expand operations without hiring thousands more officers.

- Adoption of New Tools: The administration is deploying new tools, such as AI-powered monitoring systems, facial recognition, and mobile forensic tools, to enhance enforcement capabilities.

The $30 million investment in ImmigrationOS earlier this year is part of a larger trend of funneling DHS resources into digital enforcement rather than human adjudicators.

Transparency and Accountability: The Missing Piece

Critics argue that AI-driven enforcement poses severe risks to fairness and civil liberties.

Main Concerns

- Algorithmic Bias: AI systems can replicate and amplify the racial, ethnic, and socioeconomic biases present in historical data and enforcement practices, producing discriminatory outcomes and reinforcing existing injustices.

- Opaque Flagging: Individuals may be flagged without explanation, making it nearly impossible to contest decisions.

- Overreach: Tools meant for counterterrorism or anti-money-laundering are now used for ordinary immigration cases.

- Vendor Lock-In: Palantir’s dominance risks entrenching DHS in a closed ecosystem.

- Civil Liberties Threats: Combining immigration, financial, and census data into a deportation machine raises due process concerns. AI-driven surveillance and facial recognition technologies also pose significant privacy violations, as government monitoring can intrude on individuals’ privacy rights and undermine civil liberties. Legal cases and policies increasingly address these infringements, highlighting the risks of technological overreach.
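The algorithmic bias concern above can be made concrete with a simple audit calculation. The sketch below is illustrative only: it assumes an auditor has a sample of cases labeled by group and by whether the system flagged them, and it compares flag rates between groups. The group labels, the sample, and the function names are all hypothetical; nothing here reflects how ImmigrationOS or any DHS system actually works.

```python
from collections import Counter

def flag_rates(records):
    """Return the share of flagged records for each group."""
    totals = Counter()
    flagged = Counter()
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}

def flag_rate_ratio(rates, group, reference):
    """How many times more often `group` is flagged than `reference`."""
    return rates[group] / rates[reference]

# Synthetic, made-up audit sample: (group label, flagged?)
sample = (
    [("A", True)] * 30 + [("A", False)] * 70
    + [("B", True)] * 10 + [("B", False)] * 90
)

rates = flag_rates(sample)
ratio = flag_rate_ratio(rates, "A", "B")
print(f"Group A is flagged {ratio:.1f}x as often as group B")
```

A gap like this does not prove discrimination on its own, but it is the kind of measurable signal that an independent audit would look for, and it cannot be computed at all when flagging data is kept secret.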

What Data Sources Feed the AI?

ImmigrationOS pulls from vast amounts of sensitive data sources, including:

Immigration Databases:

USCIS ELIS

- DHS/USCIS PIA-056 — USCIS ELIS (official landing page). U.S. Department of Homeland Security

- USCIS ELIS — Latest PIA Update (PDF, Sept. 2024). U.S. Department of Homeland Security

ICE EARM (ENFORCE Alien Removal Module, within EID)

- EARM 3.0 — PIA Update (PDF). U.S. Department of Homeland Security

- EID (the database that EARM uses) — PIA Update (PDF, Dec. 2018). U.S. Department of Homeland Security

- EID — Original PIA (PDF, Jan. 2010). U.S. Department of Homeland Security

- DHS PIA Collection entry noting EARM access to EID. U.S. Department of Homeland Security

These databases may also serve as training data for AI algorithms used in immigration enforcement, impacting the accuracy and fairness of automated decision-making systems.

Suspicious Activity Reports (SARs):

- Filed by banks to FinCEN under the Bank Secrecy Act

Financial Transactions:

- Wire transfers, flagged banking activity

IRS Records:

- Tax filings and payment histories

Census Data:

- Household demographics, addresses

Other Federal Sources:

- Treasury, State Department, and even Social Security records

This expansion means that applying for a mortgage, filing taxes, or even filling out the Census could indirectly flag you in an immigration database.
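To see why merging records across agencies can flag the wrong person, consider a minimal sketch of naive record linkage. It assumes, purely for illustration, that two datasets are joined on a normalized name and birth year. The record fields, names, and addresses are invented, and the matching rule is a hypothetical simplification, not a description of any real government system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    source: str
    name: str
    birth_year: int
    address: str

def normalize(name):
    """Lowercase and drop spaces and punctuation so spelling variants compare equal."""
    return "".join(ch for ch in name.lower() if ch.isalnum())

def naive_link(left, right):
    """Pair records whose normalized name and birth year agree (hypothetical rule)."""
    index = {}
    for rec in right:
        index.setdefault((normalize(rec.name), rec.birth_year), []).append(rec)
    return [
        (a, b)
        for a in left
        for b in index.get((normalize(a.name), a.birth_year), [])
    ]

# Invented example data
immigration = [Record("immigration_file", "Alex Morgan", 1985, "12 Elm St, Cleveland")]
tax = [
    Record("tax_filing", "ALEX MORGAN", 1985, "12 Elm St, Cleveland"),
    Record("tax_filing", "Alex  Morgan", 1985, "900 Bay Rd, Miami"),  # a different person
]

matches = naive_link(immigration, tax)
for a, b in matches:
    print(a.address, "<->", b.address)
```

Both tax records link to the single immigration file, even though one belongs to someone else who happens to share a name and birth year. The more databases are fused, the more opportunities there are for this kind of false link.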

AI in Action: Current Uses

Existing DHS AI Applications

- Identity Verification: Biometrics such as fingerprints, face scans, and facial recognition

- Fraud Detection: Spotting patterns in visa and green card applications

- Alternatives to Detention: Deciding who gets electronic monitoring

- Internal Chatbots: Staff-facing systems for inquiries

New Enforcement Uses Under Trump

- Flagging individuals for raids

- Prioritizing deportation targets

- Automating arrest paperwork

- Routing detainees into ICE detention beds

- Using AI in the Intensive Supervision Appearance Program (ISAP) to assess individuals’ risk levels and determine detention or surveillance measures

This represents a shift from “supportive” AI to “coercive” AI.

U.S. vs. European Union: A Tale of Two Approaches

The European Union’s AI Act, which entered into force in August 2024 and phases in its obligations over the following years, treats AI used in migration, asylum, and border control as a high-risk application requiring strict safeguards. This approach reflects the EU’s commitment to governing AI in immigration enforcement through binding policies and legal frameworks that ensure oversight and accountability.

EU AI Act Key Requirements:

- Mandatory impact assessments

- Full transparency in how AI is used

- Right of appeal for individuals affected

- Independent regulators overseeing compliance

- Severe penalties for violations

By contrast, the U.S. currently has no overarching AI law governing DHS or ICE. Oversight is mostly internal and opaque.

📌 See European Commission: Artificial Intelligence Act.

Risks for Immigrants

Immigrants face unique dangers under AI-driven enforcement:

- Faulty Data: Errors in IRS or immigration databases can trigger raids.

- Surveillance Expansion: Financial and demographic records used for immigration control.

- No Transparency: Immigrants may not know why they were targeted.

- Misidentification: Facial recognition errors have already been documented.

- Disparate Impact: Minority communities, undocumented immigrants, and other marginalized communities are disproportionately flagged and face heightened risks from AI-driven enforcement.

- Biased Immigration Decisions: AI systems can unfairly influence immigration decisions, potentially leading to biased or erroneous outcomes for individuals seeking status, asylum, or entry.
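The misidentification risk above follows from simple base-rate arithmetic: when one face is searched against a very large gallery, even a tiny per-comparison error rate can produce more false matches than true ones. The sketch below works through that arithmetic. The gallery size, false match rate, and hit rate are assumed, illustrative numbers, not measured figures for any real system.

```python
def false_match_share(gallery_size, false_match_rate, hit_rate):
    """Expected share of candidate matches that are wrong,
    assuming exactly one true match exists in the gallery."""
    expected_false = (gallery_size - 1) * false_match_rate
    expected_true = hit_rate
    return expected_false / (expected_false + expected_true)

# Assumed values for illustration only
share = false_match_share(
    gallery_size=10_000_000,   # faces in the searched database
    false_match_rate=1e-6,     # chance a non-matching face is wrongly returned
    hit_rate=0.99,             # chance the true match is returned
)
print(f"{share:.0%} of candidate matches would be wrong")
```

Under these assumptions roughly ten wrong candidates are expected for every correct one, which is why a facial recognition "hit" should be treated as a lead to verify rather than as an identification.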

FAQs: Artificial Intelligence in U.S. Immigration Enforcement (ICE & USCIS)

How is social media surveillance used in AI-driven immigration enforcement?

AI systems increasingly rely on social media surveillance, collecting data from social media platforms to monitor, flag, and track migrants and visa holders. This data is analyzed to identify potential threats, associations, or policy violations, raising concerns about privacy, due process, and the risk of misinterpretation.

Why should I read news articles about AI and immigration enforcement?

Staying informed by reading news articles helps you understand the latest developments, legal changes, and community impacts of AI-driven immigration enforcement. News coverage can alert you to new technologies, policy shifts, and grassroots efforts to protect civil rights.

What is the role of translation services in AI processing of immigration applications?

AI algorithms often process non-English applications using translation services. Errors or biases in translation can affect outcomes, as AI may misinterpret or discredit translated content, impacting fairness for applicants who rely on these services.

How are AI algorithms and machine learning models used in immigration enforcement?

AI algorithms and machine learning models are used for facial recognition, language analysis, risk assessment, and predictive decision-making. These models help law enforcement agencies flag individuals, prioritize cases, and automate parts of the enforcement process.

What is generative AI and how is it used in government surveillance and immigration control?

Generative AI refers to systems that can create new content, such as text or images. In immigration enforcement, generative AI can be used to synthesize data, generate risk profiles, or simulate scenarios, but it also raises risks of bias, misinformation, and privacy violations.

What legal challenges have involved Clearview AI in immigration enforcement?

Clearview AI, a facial recognition company, has faced lawsuits over privacy violations. Its technology, used by law enforcement agencies to identify individuals from internet images, has been challenged in separate cases for overreach and misuse, especially in immigration contexts.

What is the Hurricane Score algorithm and how does it affect deportation risk assessment?

The Hurricane Score is an AI algorithm used by DHS to assess the risk that an individual will abscond or miss required check-ins. Critics argue that its opacity and potential bias can unfairly influence critical decisions about immigrants’ lives.

How is geospatial modeling used in tracking migration patterns and border crossings?

Geospatial modeling analyzes geographic data to monitor border crossings, track migrants, and assess the impact of AI-enabled surveillance on migration flows. This technology helps law enforcement agencies optimize border security and resource allocation.

How does AI track migrants at border crossings?

AI technologies, including facial recognition and geospatial modeling, are used at border crossings to track migrants. These systems monitor movement, flag individuals for further scrutiny, and integrate data from multiple sources, raising privacy and human rights concerns.

What is the involvement of law enforcement agencies in AI-driven immigration enforcement?

Law enforcement agencies, such as ICE and DHS, deploy AI tools for surveillance, data analysis, and enforcement actions. They collaborate with technology vendors and share data across agencies, which can complicate oversight and accountability.

How does AI impact public safety in immigration enforcement?

AI is used to enhance public safety by identifying potential threats and streamlining enforcement. However, overreliance on AI can lead to civil rights violations, wrongful targeting, and erosion of trust in law enforcement agencies.

How do power differentials affect the use of AI in immigration?

AI can reinforce existing power differentials by amplifying systemic inequalities in the immigration system. Those with fewer resources or legal support are more vulnerable to errors, bias, and lack of recourse, while privileged groups may better navigate or resist these imbalances.

What role do civil rights groups and civil society organizations play in AI oversight?

Civil rights groups and civil society organizations advocate for transparency, accountability, and the protection of civil liberties in the use of AI for immigration enforcement. They challenge government overreach, call for independent audits, and support affected communities.

What rights and risks do U.S. citizens face in AI-driven immigration enforcement?

U.S. citizens can be mistakenly flagged by AI systems and subjected to surveillance or enforcement actions because of data errors or algorithmic bias. Legal protections exist, but the risk of overreach and due process violations remains a concern.

How are visa holders affected by AI surveillance and enforcement?

Visa holders are subject to AI-driven monitoring, including social media surveillance and risk scoring. AI can flag visa holders for alleged associations or policy violations, sometimes leading to visa revocation or increased scrutiny without clear recourse.

How does state legislation influence data sharing and privacy protections in immigration enforcement?

State legislation can regulate how data is shared and protected, setting privacy standards that affect the use of AI in immigration enforcement. These laws vary by state and can provide additional safeguards or, in some cases, enable broader data access.

How have court cases shaped AI regulation in immigration?

Cases in federal and state courts have set important precedents for the limits and oversight of AI surveillance in immigration. Judicial rulings help define due process rights, restrict certain technologies, and influence agency practices.

What is the role of executive orders in directing agency actions on AI and immigration?

Executive orders are formal directives from the President that can mandate federal agencies to adopt, regulate, or restrict AI technologies in immigration enforcement. These orders shape national policy without requiring new legislation.

What other forms of surveillance technology are used alongside AI?

Other forms of surveillance include mobile forensic tools, license plate readers, and social media monitoring. These technologies, combined with AI, expand the government’s ability to collect and analyze data, raising additional privacy and oversight concerns.

How is AI used to combat child sexual exploitation in immigration contexts?

AI assists law enforcement agencies in identifying, preventing, and investigating child sexual exploitation cases, including those involving migrants. AI tools analyze digital evidence, flag suspicious activity, and support prosecutions to protect vulnerable children.

What is the government’s role in deploying AI for immigration enforcement?

The government’s use of AI in immigration enforcement involves deploying surveillance technologies, setting policy through executive orders, and overseeing agency operations. This raises questions about transparency, accountability, and the balance between security and civil liberties.

Who is Samuel Norton Chambers and what is his research focus?

Samuel Norton Chambers is a researcher known for his work on AI, surveillance, and human rights. His research examines the impact of AI technologies on privacy, due process, and the rights of migrants and marginalized communities.

Visual Explainers

- Flowchart: Lifecycle of ImmigrationOS (data → raid → arrest → detention → deportation)

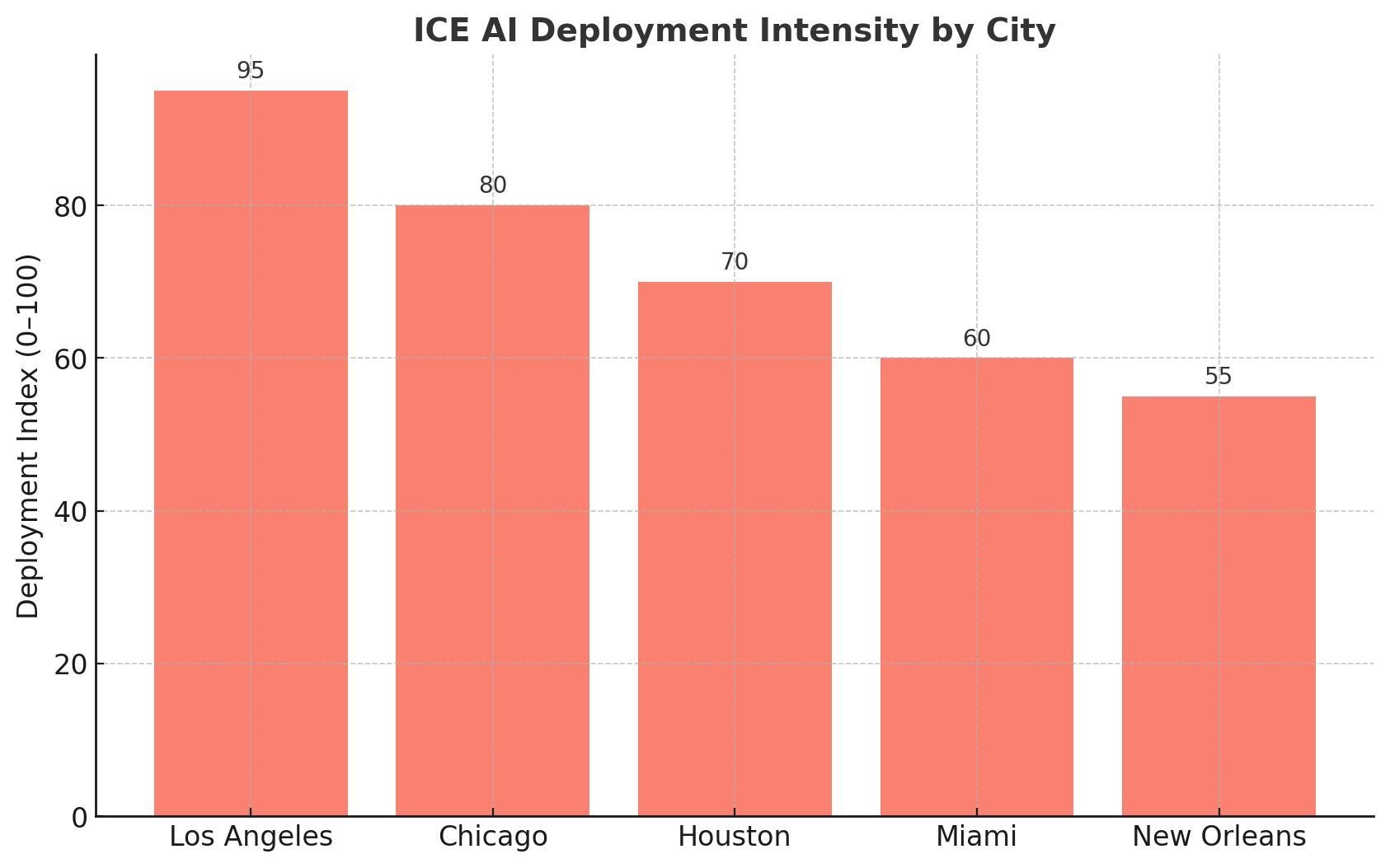

- Bar Chart: ICE Spending on AI contracts (2018–2025)

- Heatmap: Cities with highest AI deployment (LA, Chicago, Houston, Miami, New Orleans)

Protect Yourself in the Age of AI-Driven Immigration Enforcement — Contact Attorney Richard T. Herman

If you are concerned about how ICE or USCIS uses artificial intelligence and surveillance technology in immigration enforcement, you need experienced legal guidance. Attorney Richard T. Herman offers over 30 years of immigration law expertise, providing personalized strategy sessions that help you understand risks, protect your rights, and build a clear path forward.

Why This Moment Demands Expert Legal Guidance

Artificial intelligence is no longer a futuristic concept — it is shaping who ICE targets, how raids are planned, and what cases USCIS flags for fraud or denial. Systems like ImmigrationOS combine immigration records with IRS filings, financial transactions, and even Census data. These algorithms can decide whether your case receives scrutiny, whether your name surfaces in a raid, or whether your green card or citizenship application faces added hurdles.

The problem? These decisions are often opaque, biased, and nearly impossible to appeal. AI-driven immigration enforcement raises significant human rights concerns, including the risk of discrimination and lack of due process. If you are navigating the immigration system today — as a green card holder, visa applicant, asylum seeker, or U.S. citizen applying for naturalization — you cannot afford to ignore the role AI may play in your case.

This is where Attorney Richard T. Herman can make the difference.

Why Work with Richard Herman

- Proven Experience: With more than 30 years practicing immigration law nationwide, Richard has handled cases across the spectrum — family immigration, employment visas, deportation defense, investor visas, asylum, and naturalization.

- Cutting-Edge Knowledge: Richard follows the latest developments in AI, government surveillance, and enforcement policies, so you know exactly what risks exist and how to prepare for them.

- Personalized Consultations: Every consultation is with Richard directly, not with an intake clerk. You get a full hour of focused attention, where your history is carefully reviewed, and a tailored strategy is built for your specific situation.

- Education and Empowerment: Richard doesn’t just tell you what to do — he explains the law, shares government resources, and ensures you leave the consultation with the knowledge to make informed decisions.

- Trusted Advocate: As co-author of Immigrant, Inc., and a nationally recognized advocate for immigrants, Richard has built a reputation as both a fierce defender and a compassionate counselor.

What You’ll Gain from a Consultation

When you schedule your one-on-one strategy session with Richard Herman, you’ll:

- Learn how ICE and USCIS are currently using AI and surveillance technology — and what that means for you.

- Understand whether your history, records, or application may trigger AI-driven scrutiny or enforcement flags.

- Identify risks before the government does, allowing you to prepare defenses and strengthen your case.

- Gain insight into how to assess whether the AI systems used in immigration enforcement follow principles of trustworthy development, such as transparency and fairness.

- Receive links to official resources from DHS, USCIS, ICE, and the Treasury, so you can study and understand the technology yourself.

- Leave with a personalized immigration roadmap that accounts for today’s enforcement environment.

Why Delay Could Be Dangerous

Every month, ICE expands its AI contracts. Every day, USCIS runs fraud-detection algorithms across new applications. Ongoing technological advancements in AI are continually changing the landscape of immigration enforcement. You may not know if you are already in an algorithmic “risk” category until it’s too late.

By acting now — before filing, before traveling, before responding to a notice — you can take control of your future and ensure that you are not blindsided by opaque technology.

Take the First Step — Protect Your Rights

If you have questions or concerns about how ICE or USCIS is using artificial intelligence and surveillance tools, don’t wait until you’re facing a denial, raid, or removal hearing.

Contact Attorney Richard T. Herman today for a comprehensive, one-on-one consultation.

- 👉 Schedule Your Consultation Here

- 📞 Or call (216) 696-6170 to speak directly with his office.

Your future in America is too important to leave to chance — or to an algorithm. With Richard Herman by your side, you’ll have the knowledge, strategy, and protection you need in this new era of AI-driven immigration enforcement.

U.S. Government Resources

Department of Homeland Security (DHS) – AI, Data, Privacy

- DHS Artificial Intelligence Hub — Strategy, governance, pilots, and AI inventories across DHS components.

- DHS Privacy Office — Privacy Impact Assessments (PIAs), System of Records Notices (SORNs), and annual reports.

- DHS Data Strategy & Open Data — Datasets, open-government plans, and transparency reports.

U.S. Citizenship and Immigration Services (USCIS)

- USCIS Policy Manual — Adjudication standards; watch for AI-related fraud detection references.

- USCIS FOIA Reading Room — Released records on automation, vendor tools, and program guidance.

- USCIS Forms, Biometrics & Background Checks — Official guidance on biometrics and vetting.

- USCIS Data & Reports — Workload stats, approvals/denials, processing trends that intersect with automated triage.

U.S. Immigration and Customs Enforcement (ICE)

- ICE Official Site — Enforcement directives, alternatives-to-detention, and technology initiatives.

- ICE FOIA Library — Contracts, tech specs, and guidance on investigative tools and analytics.

- ICE Detention & ATD Data — ATD (e.g., electronic monitoring) reports where risk-scoring/AI may be referenced.

U.S. Customs and Border Protection (CBP)

- CBP Biometrics (Entry/Exit) — Face/biometric matching programs, policies, and privacy statements.

- CBP FOIA Electronic Reading Room — Tech program records and vendor materials.

Department of Justice (DOJ) & EOIR

- DOJ Office of Privacy and Civil Liberties — DOJ-wide privacy guidance.

- Executive Office for Immigration Review (EOIR) — Immigration courts; relevant for how tech-driven referrals reach proceedings.

- DOJ Office of the Inspector General (OIG) — Audits and investigations touching law-enforcement tech use.

Department of the Treasury & FinCEN (Bank Secrecy Act)

- Financial Crimes Enforcement Network (FinCEN) — SARs/BSA compliance that may be cross-referenced in immigration analytics.

- U.S. Department of the Treasury — Policy statements and interagency data-sharing references.

U.S. Census Bureau

- Census Privacy & Data Protection — Statistical confidentiality and data-use policies relevant to any cross-agency integrations.

Internal Revenue Service (IRS)

- IRS Privacy, Confidentiality & Data Security — Data safeguards (e.g., Publication 1075) for any interagency data access.

White House / Federal-wide AI Policy

- White House (OSTP) Blueprint for an AI Bill of Rights — Principles on automated systems, due process, and discrimination.

- OMB Memoranda on AI — Implementation guidance for federal AI governance and risk management.

- AI.gov (Federal AI Portal) — Cross-agency AI initiatives, governance, and resources.

Standards, Risk Management, and Cyber

- NIST AI Risk Management Framework — Voluntary federal framework for managing AI risks, including bias, transparency, and safety.

- NIST Privacy Framework — Strategy for balancing data utility with privacy protections.

- CISA (Cybersecurity & Infrastructure Security Agency) — Cybersecurity advisories for systems underpinning immigration tech.

Audits, Oversight & Accountability

- DHS Office of Inspector General (OIG) — Audits of ICE/USCIS/CBP programs, including IT controls and data governance.

- U.S. Government Accountability Office (GAO) — Independent audits/reports on federal AI, information-sharing, and law-enforcement technology.

- U.S. Office of Special Counsel (OSC) Whistleblower Disclosures — Pathway for reporting misuse of tech or data.

Rulemaking, Registers & Public Comment

- Federal Register — Proposed/Final Rules and Notices for DHS/DOJ technologies, data-matching programs, and PIAs/SORNs.

- Regulations.gov — Submit/read public comments on DHS/DOJ rulemakings involving automation or surveillance.

FOIA Portals (Cross-Agency)

- FOIA.gov — Central FOIA portal to request tech contracts, PIAs, guidelines.

- DHS Component FOIA (ICE/USCIS/CBP) — Direct FOIA filing for component-level records.

Professional Associations, Research Orgs & NGOs

Immigration-Focused

- American Immigration Lawyers Association (AILA) — Practice advisories on enforcement trends, automation risks, and litigation updates.

- American Immigration Council (AIC) — Research on immigration enforcement, data use, and civil liberties.

- National Immigration Project (NIPNLG) — Litigation resources and policy analysis on surveillance and due process.

- ABA Commission on Immigration — Ethical practice and due process guidance for immigration lawyers.

- Migration Policy Institute (MPI) — Nonpartisan analysis on immigration data systems and tech in enforcement.

Civil Liberties & Digital Rights

- Electronic Frontier Foundation (EFF) — Technical and legal analysis of surveillance, facial recognition, and data fusion.

- Center for Democracy & Technology (CDT) — Policy advocacy on algorithmic accountability and government tech.

- Electronic Privacy Information Center (EPIC) — FOIA litigation and reports on automated decision systems in government.

- American Civil Liberties Union (ACLU) — Litigation and reports on government surveillance and immigrant rights.

- Brennan Center for Justice — Research on law-enforcement technology, data sharing, and civil liberties.

Technology, Ethics & Compliance

- IAPP (International Association of Privacy Professionals) — Practitioner guidance on privacy governance, DPIAs, and AI compliance.

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems — Ethical design standards relevant to government AI.

- ACM U.S. Technology Policy Committee — Technical statements on algorithmic accountability and transparency.

- Partnership on AI — Multi-stakeholder best practices for responsible AI systems.

Public Interest Data & Journalism

- TRAC at Syracuse University — Detailed immigration enforcement and court data (non-government but widely cited).

- The Markup — Investigations into algorithmic impacts and surveillance tools.

How to Use This List (Quick Integration Tips)

- Cite PIAs/SORNs whenever describing specific systems (e.g., facial recognition, data matching). Link directly to the relevant DHS Privacy Office page.

- When discussing AI governance, anchor claims to NIST AI RMF for risk, bias, and transparency standards; cross-reference OMB memos for federal implementation.

- For enforcement practice impacts, pair ICE/CBP FOIA Library links with GAO or DHS OIG audits to show oversight findings.

- For client-facing guides, include FOIA.gov and Regulations.gov so readers can request records and comment on proposed rules.

- Complement government citations with highly credible professional associations (AILA, AIC, NIPNLG) for practitioner-level interpretation and litigation context.
